CASE STUDY

Getting better results by helping the user build better prompts

Since the arrival of ChatGPT, new technology, and AI in particular, has taken precedence, creating a high-stakes environment where rapid testing, iteration, and deployment are the norm. This relentless drive to deliver, compounded by the imperative to demonstrate an immediate return on investment (ROI), can lead to groundbreaking innovations but also to unforeseen challenges. This case study examines a critical issue we encountered after launch: despite achieving functional success, user satisfaction was starkly negative, prompting us to reevaluate our approach to AI interactions.

Identifying the Challenge

User feedback revealed a significant disconnect: although users were asking the right questions and the underlying data was accurate, their queries often lacked the context and specificity needed to produce a relevant answer. One incident made this especially clear: a user, a lawyer, relied on AI-generated responses that cited non-existent legal cases. Such scenarios highlighted a critical need for precision in query formulation, particularly in complex fields like law, where differences in regional legislation can dramatically alter the relevance and accuracy of an AI-generated response.

Conceptualization

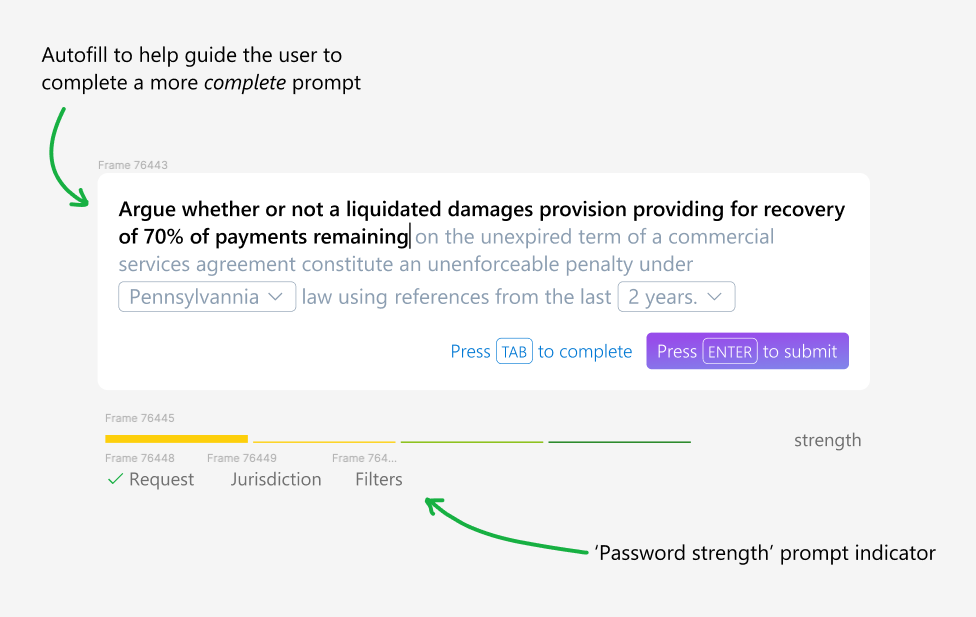

The recurring theme in user feedback was a lack of guidance in formulating queries that met the criteria required for accurate answers. This insight led me to draw parallels with existing digital tools that guide user behavior, such as password strength indicators. These tools not only tell users what the requirements are but also help them improve their input iteratively.

Innovative Solution Development

Inspired by recent advances in predictive text from Google and Apple, I envisioned a hybrid solution that would combine the guiding principles of password strength indicators with smart autocomplete. The aim was to subtly steer users toward framing their queries more effectively by providing real-time visual feedback and suggestions.
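To make the password-strength analogy concrete, here is a minimal sketch of a query "strength meter". It assumes a purely hypothetical rule set for legal queries (jurisdiction, time frame, topic, desired output) detected with simple keyword patterns; the names `CRITERIA`, `Feedback`, and `rate_query` are illustrative and do not describe the system we actually built.

```python
import re
from dataclasses import dataclass

# Hypothetical criteria: each entry pairs a component name with a regex that
# signals the component is present, plus a hint shown when it is missing.
CRITERIA = [
    ("jurisdiction", r"\b(california|new york|texas|federal|uk|eu|state of \w+)\b",
     "Name the jurisdiction (e.g. 'under California law')."),
    ("time_frame", r"\b(19|20)\d{2}\b|\b(current|since|before|after)\b",
     "Add a time frame (e.g. 'as of 2023')."),
    ("topic", r"\b(contract|tenant|employment|liability|custody|copyright)\b",
     "State the legal topic (e.g. 'tenant eviction notice')."),
    ("output", r"\b(summar\w*|list|compare|explain|draft)\b",
     "Say what you want back (e.g. 'summarize', 'list')."),
]


@dataclass
class Feedback:
    score: float            # 0.0 to 1.0: share of criteria the query satisfies
    label: str              # meter label shown next to the input box
    suggestions: list[str]  # hints for the components that are still missing


def rate_query(query: str) -> Feedback:
    """Score a query the way a password meter scores a password."""
    text = query.lower()
    missing = [hint for _, pattern, hint in CRITERIA if not re.search(pattern, text)]
    score = 1 - len(missing) / len(CRITERIA)
    if score < 0.5:
        label = "weak"
    elif score < 1.0:
        label = "fair"
    else:
        label = "strong"
    return Feedback(score, label, missing)
```

Calling `rate_query("What are tenant rights?")` rates the query as weak with three suggestions, while `rate_query("Summarize tenant eviction rules under California law as of 2023")` rates it as strong. Re-running the check on every keystroke gives the real-time feedback described above. Keyword rules are only a stand-in: a production version would likely need a more robust classifier.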

Prototype and Iteration

Collaboration was key to refining this concept. Initial discussions with the lead data and development engineers were promising but also revealed significant implementation challenges. Existing development priorities and limited resources called for a reimagined, more streamlined approach. This feedback proved invaluable: it led us to narrow the solution's scope to core functionality that could be integrated without overhauling the existing system.

Implementation and Adjustment

The refined solution was a set of enhancements to the query input interface that helped users construct their queries by suggesting an order for query components, based on common patterns and how effective those patterns proved to be. Although this pared-down solution did not realize the full original vision, it was a strategic compromise that balanced innovation with feasibility.
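The idea of suggesting component order "based on common patterns and effectiveness" can be sketched as follows. This assumes a hypothetical log of past queries, each recorded as the order in which its components appeared plus whether the answer was rated useful. The sketch picks the order with the most successful queries and proposes the next missing component. The log contents and the names `PAST_QUERIES`, `best_order`, and `next_component` are illustrative only, not real data or our production logic.

```python
from collections import Counter

# Hypothetical log: (order of components in a past query, was the answer useful?)
# Illustrative values only; not real usage data.
PAST_QUERIES = [
    (("task", "topic", "jurisdiction", "time_frame"), True),
    (("task", "topic", "jurisdiction", "time_frame"), True),
    (("topic", "task"), False),
    (("task", "topic", "jurisdiction"), True),
    (("jurisdiction", "topic", "task"), False),
]


def best_order(log):
    """Return the component order with the most successful queries.

    Counting only successful queries combines frequency (common patterns)
    with effectiveness. Ties go to the order seen first in the log.
    """
    wins = Counter(order for order, ok in log if ok)
    if not wins:
        return ()  # no successful patterns yet, so nothing to suggest
    order, _ = wins.most_common(1)[0]
    return order


def next_component(typed, log=PAST_QUERIES):
    """Suggest the next component to add, given the components typed so far."""
    for component in best_order(log):
        if component not in typed:
            return component
    return None  # every component in the preferred order is already present
```

For example, `next_component(["task"])` suggests `"topic"`, and once all four components are present it returns `None`. Ranking whole orders rather than individual components keeps the logic simple enough to bolt onto an existing input box, which matches the reduced scope described above.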

Reflection and Forward Path

While the final implementation deviated from the initial grand vision, it established a foundation for more nuanced user assistance within AI platforms. The concept itself, though not fully realized, served as a crucial learning point for future developments. The experience underscored the importance of aligning user needs with technological capabilities and of balancing ambitious innovation with practical application.

Conclusion

This case study not only illustrates the challenges faced in pioneering AI solutions but also highlights the importance of iterative design, user feedback, and flexible problem-solving in the tech industry. By continuously striving to understand and enhance user interactions, we pave the way for more intuitive and effective AI systems, reinforcing the value of user-centric design in technological advancements.